My personal journey into AI started around the time ChatGPT launched, which was almost two years ago.

I was in a different role back then, but I remember feeling really curious about what was happening. It was a fascinating moment.

When Retresco reached out to me about a role, I saw it as a great opportunity to dive deeper into something I was already passionate about.

Since I joined two years ago, so much has happened. AI evolves so quickly. There’s always something new to learn or build.

It’s never boring, which is honestly what keeps it exciting for me. So, let me share my thoughts on where I think AI is taking us.

But first:

Do I believe AI can replace human creativity?

To be honest, sometimes AI surprises me.

There have been moments where I’ve gotten an output that sparked an idea I hadn’t considered.

But still, it’s working with information that already exists. It’s not creating something from pure instinct or emotion.

So, you could argue that AI doesn’t have true human creativity or intuition, and I agree with that.

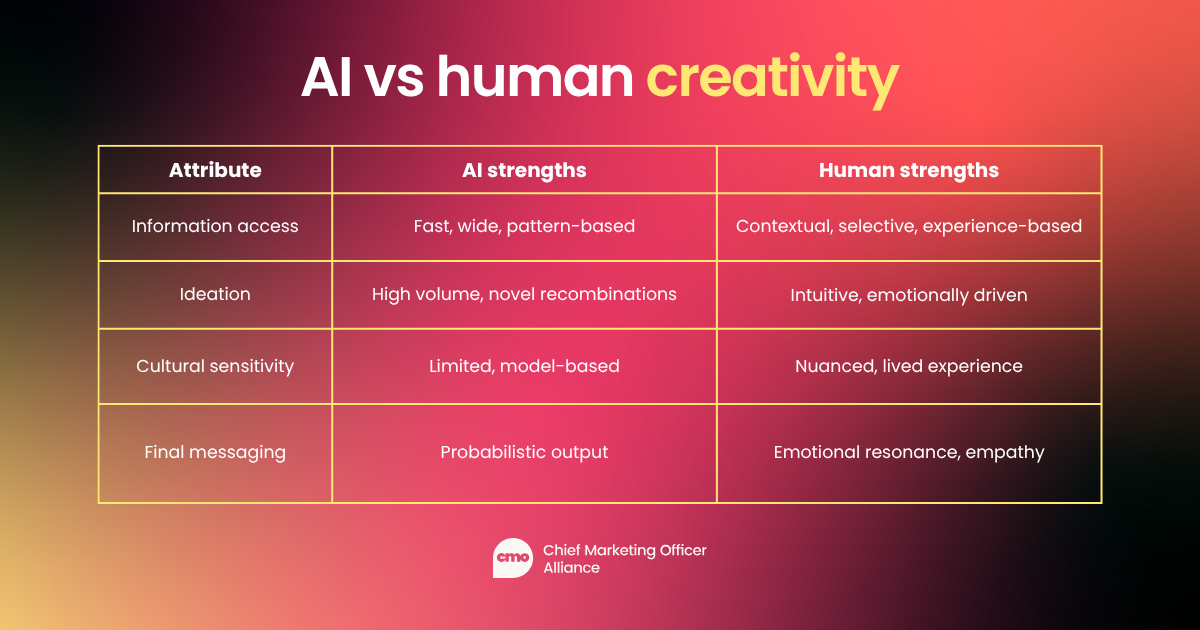

What it does offer is scale.

But there’s a limit: AI is functional.

It generates responses based on probabilities and training data. You can train a model on something specific, like finance or healthcare, and the outputs will feel more accurate or context-aware.

But when it comes to emotional nuance or understanding how different cultures might interpret a message, I don’t think large language models are ready for that. Maybe they never will be.

That final layer of communication, especially in marketing, needs to be human.

The last step—the message that actually connects with someone on a real level—still needs a person behind it.

Which brings me to the next point: can AI stack up against human creativity?

This question comes up a lot, especially when we talk about whether AI can truly compete with the depth and originality of human creativity.

I’ve thought about this quite a bit, and honestly, one thing always stands out to me: even the internet is finite. That’s something we’ve heard from AI leaders, including Sam Altman.

If AI is trained on the internet, then its creative potential is also capped by what already exists. On the other hand, human creativity isn’t bound by that. It’s infinite. That alone makes a strong case for why machines can’t fully replace people in creative work.

Of course, models will keep getting better at how they process and recombine information.

There’s a lot of talk about AGI (artificial general intelligence) and the idea that someday these systems might reason and come up with entirely new ideas. Some think it’s just around the corner. I’m not convinced; I think it’ll take more time. Even now, large language models face plenty of unresolved issues:

- There’s a lot of debate around what data should or shouldn’t be used for training.

- Copyright and intellectual property are major concerns.

There was a recent court ruling in the US that dealt with this directly. A group of small digital publishers claimed AI companies were training models on their content without permission. But the court ruled against them. The logic was that if you publish facts on your website, you can’t claim exclusive ownership over them. That decision set a big precedent.

To me, the legal conversation doesn’t address everything.

Even if facts are open to the public, the way a writer expresses them—their tone, their voice, their style—is still something unique. A lot of authors are pushing back, refusing to let their work be used to train models. And I understand that.

At the same time, I’m not against using AI to help with the process. But that’s the double-edged sword we’re dealing with.

On one side, AI can elevate your work. On the other, if you let it take over completely, you risk diluting what makes your voice original.

Can AI-generated content ever feel authentic?

It depends.

Sometimes you can tell right away that something was written by AI, right? There’s a certain structure, a certain rhythm that feels a little too clean or generic.

Other times, especially with more advanced models or more thoughtful prompting, it’s harder to detect. Still, there’s usually something that gives it away.

In media, we’ve seen that audiences trust established outlets more than those that rely fully on AI-generated content. There’s a reason for that. People are aware that AI can generate fake information, and that creates doubt. The risk of misinformation slipping through is real, and that naturally affects how people respond to the content.

However, I recently came across an experiment where researchers trained a model on classic poetry by Shakespeare, Dickinson, and other well-known English-language poets. Then they had that model generate new poems in the same style.

When a group of readers was asked to tell the difference between the originals and the AI-generated ones, nearly half couldn’t. In some cases, they even preferred the AI version. That was surprising, and it shows that if the model is trained narrowly and well, it can do some impressive things.

Still, when it comes to capturing nuance, I think AI often falls short. That’s why I believe in adding your own layer.

If you know your audience and understand the message you want to convey, you’re better off starting with AI and then shaping the output yourself. On platforms like LinkedIn, for instance, I see a lot of people posting with AI tools. That’s fine, especially for broad or low-stakes content.

But if you really want to build a brand, or write something with impact, it’s better to stay involved in the process.

It really depends on your intent. If you’re just sharing something general, AI can help you get it out quickly.

But if you’re aiming for authenticity or emotional resonance, nothing beats a human voice.

What about generative AI for marketing campaigns?

Again, it depends.

I’ve seen quite a few campaigns lately (especially on TV) that seem obviously AI-generated.

You notice small things, like the way people move or speak, that signal it’s not quite real. And when that happens, I can’t help but feel like it’s the shortcut version of what could’ve been a great creative piece.

Don’t get me wrong! AI can support some parts of a campaign really well.

But when the whole thing leans too hard on automation, it starts to feel cheap. You lose the nuance that comes from working with actual creatives—animators, copywriters, actors—who understand the emotional layer brands are trying to convey.

There are positive examples, though. I remember a few years back, around the Super Bowl, some brands experimented with AI-generated ad copy and ideas. They asked tools to come up with taglines, scripts, even concepts for how to pitch their products.

The results were hilarious. Sometimes they hit, sometimes they missed entirely, but at least those campaigns embraced the weirdness of it all.

Today, I think audiences are more aware of when AI is being used, and they expect more.

If you go that route, it has to be done really well. Otherwise, it feels like you’re cutting corners. My take is simple: use AI as an assistant, not a replacement.

Let it help with frameworks, research, or variations on messaging, but don’t let it do the whole job.

GenAI can fail, and terribly.

We’ve all seen examples of AI getting it wrong in product demos. Even companies like Google, which launched AI Overviews for search, have had demos that showed incorrect information. Something as basic as the time or location of an event was displayed wrong.

Mistakes like that show why it’s important to double-check what AI produces. You still need to be the editor. The human in the loop. Because when it goes off track, it can damage trust very quickly.

But there’s an even darker side to it, too.

There have been more serious cases in the news—like one involving a student who used an AI chatbot and was told, “You are not needed.”

That’s not just a tech fail. That’s dangerous. If a message like that reaches someone who’s vulnerable, it can have real consequences. And unfortunately, we’ve already seen that happen. Some people have been harmed by the output of AI systems that were supposed to be harmless or helpful.

It raises the question: who’s responsible when something like that happens?

Is it the company that built the model? The user who prompted it? Right now, the boundaries aren’t clear. But we need them to be.

So, where does the responsibility lie? I believe it starts with the developers. They’re the ones who understand how the models work: what data they’ve been trained on, what algorithms are in play, how the output is shaped.

But even for developers, large language models operate like a black box. There’s a lot going on behind the scenes that we can’t fully trace or explain.

At the very least, tech companies need to be transparent about what their systems can and can’t do. They should be building in safety mechanisms, running tests, and making sure there are clear guardrails. If something potentially harmful is about to be generated, the model should know to stop. It’s not enough to say “use at your own risk.” Companies have a role to play in educating users, too.

When OpenAI dissolved its Superalignment team, which focused on long-term AI safety, that sent the wrong signal. We need more people thinking about safety, not fewer.

At the same time, governments and regulators also need to step in. If you want to launch a model in a specific country, you should meet certain standards. You should have to show that you’ve done the work to make it safe.

And finally, as users, we all have a part to play. We need to approach these tools with critical thinking. We can’t assume that just because a response sounds confident, it’s correct.

So really, it’s a shared responsibility.

Developers, regulators, and users all need to be involved. But the burden starts at the top, with the people building these systems.

What gives me hope (and what still worries me)

Looking ahead, there’s a lot that excites me about AI.

As a product marketer, I’m naturally curious about how new tools can solve real problems. Not just in media and publishing, but across industries. I think we’ll continue to discover smart, helpful ways to integrate AI into workflows. Used properly, it really can make our lives easier.

But I’m also concerned about where things might go if we don’t put up the right boundaries. If we rush toward AGI without regulations in place, we could lose control of the narrative entirely.

That’s why I hope we start seeing more government involvement, more cross-industry guidelines, and more public discussion. We need to get ahead of the risks while we still can.

Final thoughts: Test it, but stay critical

Here’s my advice: don’t be afraid to try AI. Just be smart about how you use it.

The only way to get comfortable is by experimenting. Use it to write a bedtime story for your kid with their name and favorite characters. Let it help you with emails or summaries. Explore its capabilities in your day-to-day life. The more you use it, the better you understand its strengths—and its limits.

But always, always bring your own judgment into the mix. AI can be powerful, but it’s not perfect. So test it, enjoy it, but stay aware.

That’s the balance we need to strike.

Download Webflow’s “Driving marketing team efficiency with AI” ebook to streamline complex processes and eliminate roadblocks using AI without missing out on creativity.
